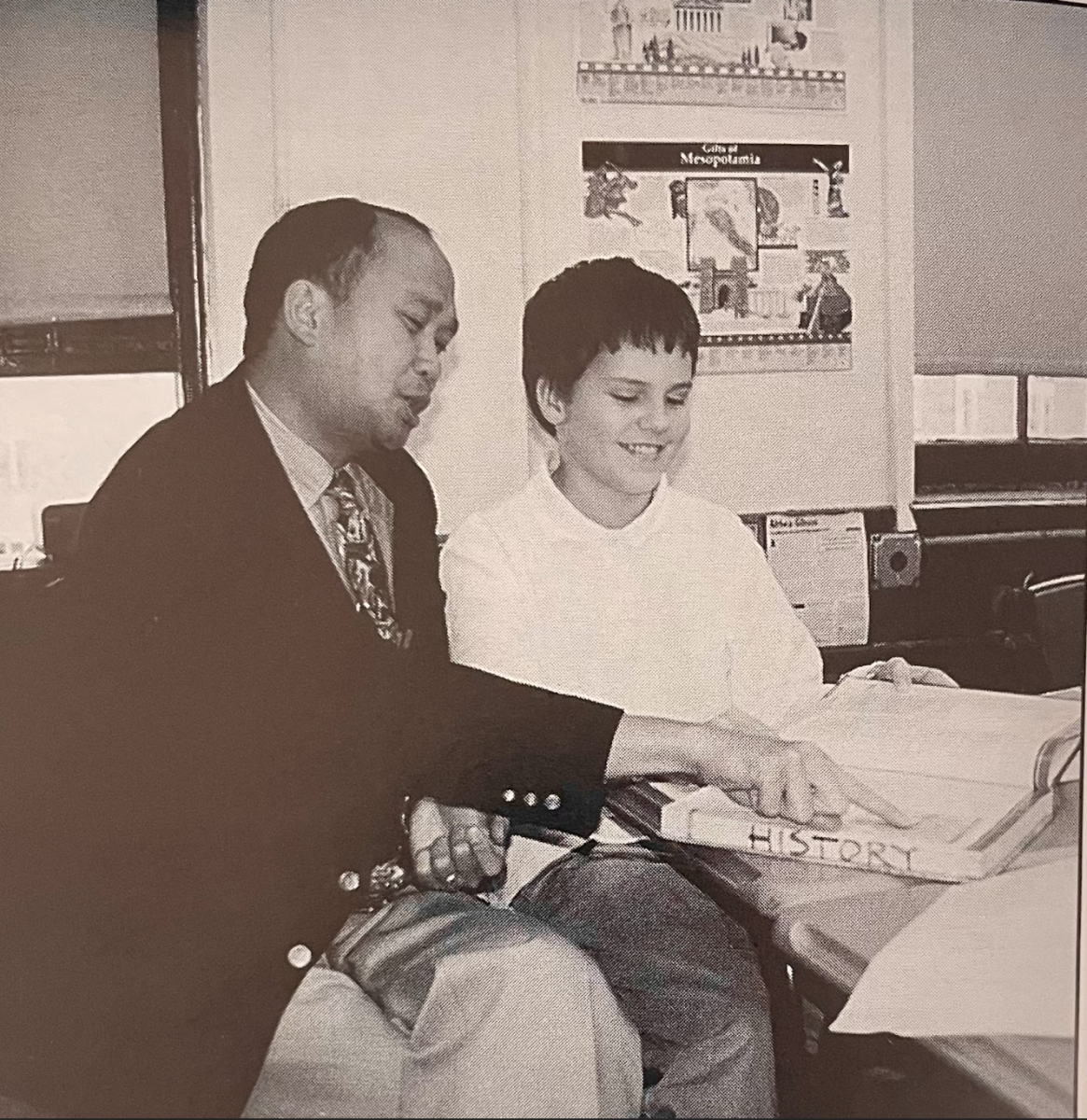

I appreciate the appeal of today’s technological tools, AI in particular. I’ve always found screens transfixing, from my family’s 1970s TV, through a series of advancing technologies (Ataris, VCRs, personal computers, etc.) to today, where I’m often on multiple screens at once. Also, like many at Poly Prep, I frequently feel overburdened and stressed out. Shortly after agreeing to write this piece, I found myself late into a long day with a multiple-choice quiz to write. Surely, AI could have done that for me. So why resist that temptation? Recent studies and statements by experts reveal several reasons for caution.

AI interferes with intellectual development

A group of MIT researchers conducted a 2025 study in which participants were divided into three essay-writing groups. One wrote with ChatGPT’s help, another with Google’s search engine and the final group with no tools, using their “brain only.” Not surprisingly, the ChatGPT group showed the lowest level of brain engagement and “consistently underperformed at neural, linguistic, and behavioral levels.” The longer the group members worked with AI, the more likely they were to simply copy and paste the whole essay. That is a terrible habit to get into as you try to grow as a student.

AI is habit-forming

Unfortunately, a debilitating habit is exactly what you’re likely to develop with AI. In a 2024 study, three professors concluded that among users of large language models such as ChatGPT, “overdependence may foster behavioural patterns similar to addiction, where individuals increasingly defer decisions to ChatGPT and struggle to make choices independently.” This kind of addictive behavior also shows up in non-educational uses of AI; in extreme cases, people have become lost in disastrous relationships with AI chatbots.

AI puts us into ethical gray areas

Poly is doing its best to develop appropriate guidelines for AI, but it’s hard to draw lines around its use, and harder still to pull the technology out of the ethical muck from which it came. Large language models were developed by “scraping” huge quantities of writing from the internet, often without the authors’ permission. As novelist Andrea Bartz wrote in The New York Times, “I write my novels to engage human minds — not to empower an algorithm to mimic my voice and spit out commodity knockoffs.” Complaints like hers are why Anthropic, a company valued at $183 billion, was forced to agree last year to pay $1.5 billion to a group of authors and publishers. And it’s not just big companies that are ethically tarnished by AI. Researchers at the Max Planck Institute recently found that when people could delegate a task to AI, they were more likely to cheat in ways that benefited themselves. We all like to think that we’re stronger than that, but the evidence suggests that AI makes us weaker.

AI harms the environment

Many young people seem aware of this particular concern, but they may not know the full story. According to the MIT Technology Review series “Power Hungry: AI and our energy future,” our current AI trajectory (which relies on huge data centers that devour massive amounts of water and energy) is likely to keep us dependent on climate-changing fossil fuels like coal and natural gas. The Intergovernmental Panel on Climate Change warned in 2022 that “human-induced climate change is causing dangerous and widespread disruption in nature and affecting billions of lives all over the world…, with people and ecosystems least able to cope being hardest hit.” A study published in the British medical journal The Lancet estimated that pollution, most of it air pollution, causes about nine million premature deaths worldwide each year. And that was before AI use became widespread.

AI poses an existential risk to human civilization

Here, I have to mention the largest threat posed by AI: the risk that it could end human civilization as we know it. Obviously, these are heavy words to write, and I don’t enjoy introducing them into a student newspaper. But I think it’s worse to ignore the issue, which centers on AI developing superhuman intelligence that becomes impossible to control. Are such dire scenarios speculative? Yes. But they are not the speculations of kooky or uninformed pundits. They come from some of the people who understand the technology best. Many founding figures and experts in the field signed a one-sentence 2023 statement on AI risk, asserting that “[m]itigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Given the level of this threat, what should one do? I don’t recommend completely closing yourself off to AI. Ignorance could make it harder to contain. I think it’s OK to learn about it and to work with teachers to find ways to engage carefully and responsibly with it (Poly has courses that help you do just that). But please, approach AI with extreme caution. The technology is disruptive and poorly regulated, and it has the potential to do great harm to you and to all of society. Every time we use AI, we risk adding to the problems listed above. I find AI tempting too, but the more I look into it, the more certain I am that we should resist it as much as possible. This technology, which seems so harmless on our screens, may be one of the most dangerous inventions ever unleashed.